Edition 23

So, some more on Gen AI (of course!), crazy shoe designs, self-checkouts, medication and some other jazz.

Many things are uncertain about Generative AI, but one thing is clear: it’s an exhausting job keeping up. So apologies for the two-month hiatus, but between writing new keynotes and some hefty consulting, it’s been busy. I’ll try to get back to a more regular cadence from now on.

Big ones.

1- Generative AI: the worst it will ever be, or the law of diminishing returns?

We’ve seen some incredible announcements lately. GPT-4o was astonishing, Google’s Project Astra (shown here) perhaps even more remarkable, but a couple of questions keep popping up.

One is that this stuff continues to get ever more sophisticated, more bafflingly magical, but not necessarily more useful. The initial use cases continue to be driven by what the technology can do, not what people need help with. The issue remains that people still work around software; software has yet to work around people. For this we need new systems, not patches.

The bigger question is how much better will this stuff get?

Are the problems of Generative AI (hallucinations, errors, bias, overconfidence) simply problems that adding more and more data and more computing power can solve?

A group of researchers just published a scientific paper which says that high-quality language data that can be used to train AI will be exhausted by 2026.

That’s only two years from now, and it seems that things can only go downhill from there.

We have already seen similar effects with previous versions of OpenAI’s models, which effectively got dumber as they ingested more data.

And I mean really dumb, like failing to perform basic mathematical operations.

To make matters worse, as there is a lack of inputs that can be used for training models, texts created by Generative AI are now being used to train other AI models, leading to all sorts of side effects like model collapse.

Even to a layman this sounds a bit absurd. And for those who have not seen the episode of Silicon Valley where AI Dinesh communicates with AI Gilfoyle: it does not end well, even in a comedy TV show.

Is it really that smart?

Generative AI can write functional code or great sentences because it has learned from the rules of a programming language or from examples of texts written by humans in the past 200 years.

It does know the rules, it knows what is typically done, but it doesn’t know why it’s done that way. And since it is based on rules, anything that defies this logic is strange to it.

This is one of those amazing areas where you realize quite how stupid the idea of making it “as good as a human” is.

A seven-year-old could comfortably beat AI at chess most times, but the AI could probably make 10,000 extremely good 2nd and 3rd moves in the time it takes the poor kid to even see what's happened. So is it better than a human?

Yes and no, because the kid could make a move that would not be the logical next step.

He could sacrifice a pawn or a bishop to set up a checkmate in a move or two, and that would only confuse the AI, because from its perspective, those moves make absolutely no sense.

To some extent, to get good enough to always beat the kid, Generative AI would need to “understand” chess, not simply parlay out plausible typical moves.

Don’t be fooled

Generative AI for images is a good place to see the limitations of the current technology.

I can get AI to render out incredible images of a house in seconds, seemingly “understanding” how big an 8-bedroom house should be, placing bedrooms in sensible places, and placing bathrooms right next to them.

But it's not magic, it's probability.

It has enough information to “know” how houses are typically proportioned, how they are commonly related to roads, what are the most typical layouts… and how to sort the pixels and create the digital image I was looking for.

The AI that made the image doesn't “know” what concrete is, or what cantilever it can span.

It doesn't “know” what rain is, or how it may flow down the roof of a house.

It doesn't “know” what building code is, or what zoning rules apply, or what size and orientation of window will be cripplingly expensive to cool with AC.

It doesn't “know” or “understand” basically anything. It just follows the patterns.
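
To make “it just follows the patterns” a bit more concrete, here is a deliberately tiny sketch in Python. It is not how any real image or language model is built; those are vastly larger and work very differently under the hood. This is a toy under loud assumptions: the example sentences and the `train_bigrams` and `most_likely_next` helpers are all made up for illustration. All it does is count which word tends to follow which, then pick the most frequent one.

```python
from collections import Counter, defaultdict

# Toy "training data": made-up sentences, purely for illustration.
corpus = [
    "the bedroom is next to the bathroom",
    "the bedroom is next to the bathroom",
    "the bedroom is next to the hallway",
    "the kitchen is next to the garage",
]


def train_bigrams(sentences):
    """Count how often each word follows each other word."""
    counts = defaultdict(Counter)
    for sentence in sentences:
        words = sentence.split()
        for current, following in zip(words, words[1:]):
            counts[current][following] += 1
    return counts


def most_likely_next(counts, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = counts.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


model = train_bigrams(corpus)
print(most_likely_next(model, "next"))  # the only word ever seen after "next"
print(most_likely_next(model, "the"))   # whichever word followed "the" most often
print(most_likely_next(model, "rain"))  # never seen, so there is no pattern to follow
```

Ask it about “rain” and it has nothing to say, because the word never appeared in what it was fed. It has counts, not concepts.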

But could this be solved by scale? Does more data create something akin to understanding? How much data? These questions become philosophical, because if you break each requirement down into separate things (a building that fits code, a building that doesn’t have too much insolation, a building that works for floor elevation), in theory it’s all possible, and just a matter of data and power.

Or, do we need a different thing?

Chess can be played very well by computers, but it's a different form of AI than the one you currently use to accelerate your online research for your midterm paper, or to create yet another generic post for your company’s blog.

And no, that one is not as smart as you think it is either.

Because it doesn't think. It just computes.

2- The skinny on weight loss medications

The lines between prescription drugs, wellness, beauty, health and cosmetics continue to blur. Slimming drugs like Ozempic have become consumer brands in their own right, making the marketplace extremely turbulent. Direct-to-consumer platforms like Ro Health, NURX, and Hims continue to drive interest in (and prescriptions for) medications once considered the domain of doctors. Now we see a brilliant regulatory hack from Hims: using the FDA-listed shortage of Ozempic and other medications to make a compounded version, which allows them to circumvent patent protection and the FDA approval process. Somewhere a lawyer is earning his bonus, since Hims’ share price rocketed on the news that a version of the drug could now be bought for about 1/5th the price.

3- Self Checkouts Out

Dollar General is one of the many retailers turning their backs on self-checkout, and this makes quite good sense. One of the weird things about self-checkouts is that, like everything in the modern world, they've created an enormous amount of furore. A lot of people absolutely hate them.

For me it always seems extremely easy to think about where they belong and where they don’t: use empathy and think about people’s needs.

Never force people to use them, have them as an additional option to increase capacity.

Don’t use them where labor is cheap and abundant.

Don’t use them in places where the purchase experience is awkward and complex (like duty free stores).

Think about your customer base and their needs and behaviors.

Little ones.

1 - Allbirds, a tech company that just happened to make shoes, is in trouble. Who else is? Another reminder that if you sell running shoes you’re in the fashion or sportswear industry, not the tech industry. You will live or die based on whims in desire, fickle tastemakers and the quality of your designs, not software. How many more times will we need to be reminded of this?

2 - “I'm happy to offload navigational skills to my phone, but I hate it when my phone starts auto-suggesting answers to people's messages” A rare piece that examines the relationship between technology and how we think and what it means to us.

3 - Annoyed by consultants (and authors too) who cherry-pick data to make their point? This was a good critique of their flaws. Rules are hard.

4 - Where technology and regulation meet, there is always tension, especially in Germany, where people seem to have an awkward relationship with automation, privacy, security and the future. So now automated stores are forced to close on Sundays.

5 - Do we hate simple technology that actually works, or are we limiting what we can do by using the wrong software? The comments on this thread are interesting: what happens when a Formula 1 team manages 20,000 parts in Excel.

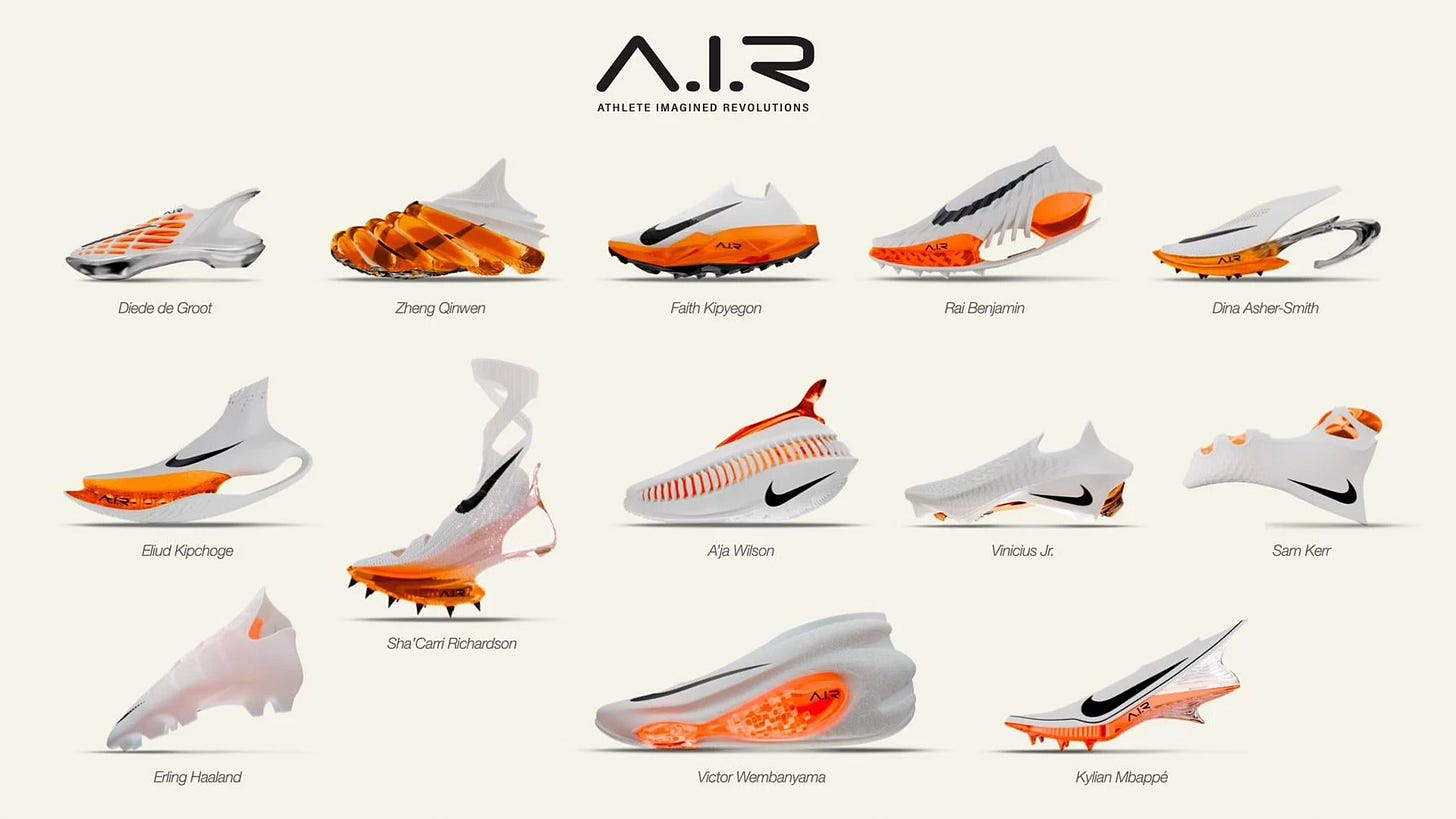

6 - I’m always fascinated by the creative process, and AI has powerful implications for it. On the one hand it seems absurd to think algorithms can be good at “ideas”, but on the other hand they can try billions of unlikely combinations in seconds. They have no fear, no embarrassment, and they don’t try to make the audience happy. So when Nike uses AI to make shoes, we get things that are preposterous, but also many potential breakthroughs.

7 - Reflecting on a decade of tech predictions, 5 lessons learned from the biggest mistakes and misjudgments.

8 - I love this piece on a new CEO taking on digital transformation at Ferrari, good to see someone who hates bureaucracy as much as me.

Me, me, me.

The last few months I’ve been presenting a new keynote on how AI can really be used by business. It’s taken me to Amsterdam, São Paulo, Cape Cod, Barcelona and more.

I’ve also been doing presentations on the big themes in Advertising. I’ve got upcoming presentations open to the public in London (July 2nd-4th), and at ADMA in Sydney (August 20th).

Last thing from me: I’ve just created some new workshops on AI for Business. As you can tell, I’m passionate about using AI to do things better and create what doesn't exist yet. Get in touch if that’s of interest.

That’s it for now.

AI reminds me of the old jokes about software designed by programmers for programmers. Scr3w the customer; let's put in what we want!

Among the things I do, I teach Freshman Composition to college students. I DO NOT WANT essays written by ChatGPT. That is a form of plagiarism, which clearly refers (in part) to submitting work that one did not write oneself. If AI wrote it, then technically, no one wrote it, given that AI output is not copyrightable.

I am sick to death of the AI-generated image cr@p that wouldn't know how to render any of this non-art if it weren't trained on the distinctive output of a bajillion real artists, none of whom consented to have their work used as "training material."

And now we have Adobe Systems' revised TOS that states they can and will use any customer work to train their models.

Is it any surprise that people are upset? In part, the collective reaction is a resounding "Did you ask me if I want this cr@p? And given that you didn't, how are you going to react to my desire to make this stop?" And who gave you the right to think this grand-scale appropriation is OK?

I don't want glue in pizza sauce. I don't want to use rocks as nutritional supplements. I don't want law briefs full of fictional case law or 6-fingered photos or any of the rest of this talentless slime.

And as to self checkouts—I can get through the checkout lane faster, ensure that items are bagged for optimal efficiency when I get home, and I don't have to deal with a cashier who licks their fingers to open the bags and expects me to make small talk while they try to hustle me through a slow line. Why not focus on my actual convenience for a hot second?

My money is on diminishing returns, re: generative AI. I'm also betting the appeal of the AI aesthetic trends out sooner than later. It's hard for me to imagine something so un-precise being used generatively by commercial enterprises.